Staff Augmentation: The Smart Way to Scale Your Workforce Efficiently

Today's dynamic business environment requires organizations to respond quickly to rising demand, technological change, and customer needs. One practice in high demand is staff augmentation, which lets organizations expand their workforce quickly and economically without a long-term recruitment obligation. With it, organizations can stay agile, access quality talent, and deliver projects effectively.

This article explains what staff augmentation is, how it works, its types, benefits, drawbacks, and future directions. It also compares staff augmentation with outsourcing and managed services and describes when companies should use this model.

What Is Staff Augmentation?

Staff augmentation is a flexible staffing model in which firms bring in outside talent on a project or temporary basis to fill niche skill gaps. Instead of hiring permanent employees, firms engage professionals temporarily through a staff augmentation company to supplement their existing workforce. This model lets businesses concentrate on core functions while tapping experts who deliver value right away. Staff augmentation is widely used in IT, healthcare, finance, and other industries where expertise and quick responsiveness are needed.

How Staff Augmentation Works

The process begins with identifying the talent gaps in an organization. Once a project's needs are clear, the organization partners with a vendor that supplies suitably skilled professionals. The external talent then joins the organization's existing teams and works within its structure.

For example, in software development, a business can bring in an AI, cloud, or security specialist to fill a project role immediately rather than waiting through a lengthy hiring process. The process involves:

Establishing the needed skill sets.
Choosing a trusted vendor.
Hiring and onboarding subject matter experts.
Integrating the dedicated professionals into the in-house team.

Types of Staff Augmentation

Staff augmentation falls into several categories, each addressing a different business need:

Commodity-Based Augmentation: Bringing in staff for general tasks that need no domain expertise, e.g., admin support.
Skill-Based Augmentation: Bringing in people with a well-defined set of skills, e.g., data experts, digital marketing specialists, or technical writers.
Highly Skilled Augmentation: Bringing in premium specialists, e.g., programmers, cybersecurity experts, or data scientists.
Short-Term Augmentation: Contract work to meet short-term project requirements.
Long-Term Augmentation: Longer contracts to support ongoing projects or close persistent skill gaps.

These categories show the flexibility of the model, which lets companies scale according to project complexity and length.

Key Benefits of Staff Augmentation

The benefits of staff augmentation apply across industries and company sizes. The most important advantages are:

Access to Expert Professionals: Companies can use experts, such as cloud or AI specialists, with no long-term commitment.
Flexibility and Scalability: Companies can scale up or down based on project size.
Cost Savings: Companies avoid recruitment, training, and employee benefit expenses.
Shortened Time-to-Hire: Time-to-hire is greatly reduced compared to traditional hiring.
Increased Control: Unlike outsourcing, organizations keep direct control over augmented staff, who therefore follow internal procedures.
Decreased Risk: Short-term engagements limit long-term costs while preserving flexibility.

For businesses that need a dedicated development team over a longer period, staff augmentation lets them bring in the necessary experts without the constraints of permanent hiring.

Common Challenges and How to Overcome Them

While staff augmentation offers real advantages, it also comes with problems. Understanding the pros and cons of staff augmentation is necessary to implement it successfully.

Problems:

Miscommunication due to geographical or cultural differences.
Overdependence on external talent.
Integration issues with internal employees.
Security risks associated with sharing confidential information.

Solutions:

Establish open communication lines and clear project procedures.
Keep a balance between internal and external personnel.
Provide good onboarding so that collaboration is easy.
Enforce strict security measures to protect information and intellectual property.

Staff Augmentation vs. Managed Services vs. Outsourcing

Organizations often weigh staff augmentation against managed services and outsourcing before deciding what to do. Here is how they differ:

Staff Augmentation: Uses external talent to expand existing teams. The organization keeps complete control over project management.
Managed Services: Hands whole functions or processes to a third-party provider who delivers them end to end.
Outsourcing: Hands individual projects or business functions to third-party vendors, typically with less direct control.

The biggest distinction between staff augmentation and outsourcing is control and flexibility. Augmentation provides qualified people who work under your control, while outsourcing hands full responsibility to a vendor.

When Should a Company Use Staff Augmentation?

Staff augmentation works best in situations like these:

When an organization needs specialist skills not present in the company.
When time is short and permanent recruitment is not feasible.
When companies need extra staff for peak periods.
When long-term commitments are not possible.
When testing new markets or technology before committing to permanent hires.

Typical staff augmentation examples include software firms engaging developers for an app release or enterprises hiring cybersecurity professionals for short-term audits.

Steps to Implement Staff Augmentation Successfully

To get the most from the model, organizations should follow these steps:

Identify Needs: Identify the skills required and the project goals.
Select a Vendor: Choose a seasoned staff augmentation company with a successful track record.
Select Talent: Interview and screen candidates carefully.
Onboard Successfully: Train the new staff and establish procedures for integrating them with in-house staff.
Manage Performance: Track progress with regular reporting and feedback.
Measure Outcomes: Quantify project ROI and success in order to improve future engagements.

Future of Staff Augmentation

Demand for IT staff augmentation services will grow faster as digitalization accelerates. More and

Healthcare Email Marketing: Building Trust and Patient Engagement Tactics

As more of their audience moves online, healthcare organizations are turning to email marketing as an effective way to communicate, educate, and build enduring patient trust. Healthcare email marketing is distinct in that it is designed to share valuable, actionable, and timely information that supports patient care and organizational growth. Used properly, email marketing can bridge the gap between healthcare organizations and patients, producing happy, informed, and loyal patients.

Why Email Marketing in Healthcare Matters

Healthcare email marketing goes beyond promoting the business. It is a useful tool for improving patient engagement and for sending appointment reminders, health education, and good news. Since many patients check their email daily, the channel provides numerous touchpoints that engage patients, reduce no-shows, and raise awareness of preventive care. For doctors' offices, email marketing sustains healthy patient relationships by delivering specialized content such as wellness programs, vaccination programs, and educational newsletters.

Getting to Know the Target Audience

Before launching an email campaign, you need to know your audience. Patients have different needs depending on their conditions, age, and interests. Email segmentation lets healthcare marketers target campaigns to specific patient groups, such as sending tailored advice to diabetic patients or appointment reminders to pregnancy care recipients. By sending what works best for each segment, healthcare organizations build credibility and achieve better open and response rates.

Healthcare Email Campaign Types

Personalized email campaigns for patients come in several types, each serving a different purpose. The most effective are:

Health Education Newsletters: Share research headlines and health tips to inform and engage patients.
Appointment Reminders: Minimize no-shows by sending appointment reminders.
Follow-Up Emails: Emails sent after an appointment increase patient satisfaction and continuity of care.
Wellness and Preventive Campaigns: Encourage healthy living with diet tips, vaccination reminders, or seasonal reminders.
Promotion Campaigns: Announce new facilities, products, or telemedicine services.

These campaigns support patient retention and build trust in the brand.

Healthcare Email Marketing Best Practices

Following healthcare email marketing best practices ensures compliance, professionalism, and relevance. The primary rules are:

Stay HIPAA Compliant: Do not share patient details, and always store and send email through secure channels.
Get Permission: Do not send unsolicited emails; patients must opt in before receiving messages.
Be Relevant: Do not over-promote; focus on value in every message.
Segment Your Audience: Segmentation ensures each patient receives messages suited to his or her specific needs.
Be Consistent but Not Excessive: Regular emails sustain engagement without overloading patients.

These measures establish credibility and trust with patients and health professionals.

Writing the Ideal Healthcare Email

The key to a successful campaign is getting the message right. The ideal healthcare email balances empathy, simplicity, and value.

Subject Line: First impressions count. Brief, relevant, and emotionally engaging subject lines such as "5 Tips for Heart Health Every Day" work best.
Personalization: Address patients by name and tailor messages to their background or interests.
Visuals and Tone: Use a clean design and a welcoming, professional tone that instills confidence.
Call-to-Action (CTA): Include a clear call to action, such as "Book Your Checkup," "Learn More About Diabetes Care," or "Join Our Health Webinar."
Mobile Optimization: Make sure the email reads well on a phone, since many patients will open it on a smartphone.

Together, these elements increase patient engagement through email and improve overall conversion.

Tools and Automation of Healthcare Email Marketing

Automation makes campaigns both more efficient and more tailored. With healthcare digital marketing automation, hospitals and clinics can schedule reminders, send follow-ups automatically, and run programmed message sequences. Popular, user-friendly software such as Constant Contact, Mailchimp, and HubSpot offers efficient automation, though compliance must not be sacrificed for convenience. Automation also allows engagement tracking and campaign targeting while removing administrative hassle. For example, automated follow-up emails can encourage patients to return for annual checkups or stay consistent with prescribed treatment plans, supporting both continuity of care and trust.

Measuring Email Marketing Success

The success of your healthcare email campaign depends on measuring the right metrics. The most important KPIs are:

Open Rate: Indicates how compelling your subject lines are.
Click-Through Rate (CTR): Measures the share of recipients who clicked your CTAs.
Conversion Rate: Indicates the share of recipients who performed the desired action, e.g., booked an appointment.
Unsubscribe Rate: Indicates whether your content is meeting audience expectations.

Based on this data, health marketers can adjust campaign frequency, refine segmentation, and craft more effective messages.
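The four KPIs listed in the measurement section can be computed from raw campaign counts. The sketch below is a minimal illustration, not a reference to any email platform's API: the `CampaignStats` fields and the helper names are hypothetical, and it assumes every rate is taken relative to delivered emails (some teams compute conversion rate relative to clicks instead).

```python
from dataclasses import dataclass


@dataclass
class CampaignStats:
    """Raw counts for one email campaign (all field names are illustrative)."""
    delivered: int
    opened: int
    clicked: int
    converted: int      # e.g. recipients who booked an appointment
    unsubscribed: int


def rate(part: int, whole: int) -> float:
    """Return part/whole as a percentage, or 0.0 when nothing was delivered."""
    return 100.0 * part / whole if whole else 0.0


def campaign_kpis(stats: CampaignStats) -> dict:
    """Compute the four KPIs, each relative to delivered emails (an assumption)."""
    return {
        "open_rate": rate(stats.opened, stats.delivered),
        "click_through_rate": rate(stats.clicked, stats.delivered),
        "conversion_rate": rate(stats.converted, stats.delivered),
        "unsubscribe_rate": rate(stats.unsubscribed, stats.delivered),
    }


if __name__ == "__main__":
    # Made-up counts, purely for illustration.
    example = CampaignStats(delivered=1000, opened=250, clicked=50,
                            converted=10, unsubscribed=5)
    for name, value in campaign_kpis(example).items():
        print(f"{name}: {value:.1f}%")
```

Tracking these values per segment and per campaign, rather than as one overall figure, makes it easier to see which audience or message is behind a change.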
Common Errors to Avoid

Although email marketing holds great promise, some common traps make it less effective. Steer clear of the following mistakes:

Neglecting Personalization: Generic emails can push patients away.
Information Overload: Deliver content in tight, brief formats.
Forgetting Compliance: Ignoring privacy rules can destroy a reputation.
Inconsistent Branding: Use a consistent tone and visual brand.
Skipping A/B Testing: Continual testing helps determine what works best with patients.

Avoiding these errors helps you run successful and trustworthy campaigns.

Conclusion

In today's online age of care, email marketing for healthcare providers is not merely advertising; it is about establishing trust, better communication, and patient satisfaction. With the blending of patient email campaigns and

Cloud Native vs Traditional Applications: What's the Difference and Why It Matters for Modern Businesses?

Cloud native technology has dramatically transformed the way businesses develop and deploy applications. Understanding the difference between cloud native and traditional applications is important for organizations seeking a competitive advantage in a fast-changing market. This architectural change isn't just a technology innovation; it's a complete paradigm shift in how application development and deployment get done.

What Are Traditional Applications?

A monolithic architecture is the classic style of application design, in which everything (user interface, business logic, and data access layers) forms one tightly integrated unit. Traditional application architecture is defined by this structure. Traditional app development focuses on building applications that run on physical servers or virtual machines in on-premises data centers. These applications are upgraded as complete packages, which involves a lot of planning and downtime. In stark contrast to cloud native deployment, even minor changes require redeploying the complete application stack.

What Are Cloud-Native Applications?

Cloud native app development uses a distributed architecture built specifically for deployment in the cloud. Cloud native apps rely on microservices, containers, and orchestration tooling like Kubernetes. Microservices organize an application as discrete, independent services that interact through APIs, so that different teams can develop, run, and scale them independently. Cloud native architecture relies on automation, CI/CD pipelines, and infrastructure-as-code principles. Its design treats infrastructure as ephemeral and dynamic rather than static and rigid, which changes how organizations manage their tech stack.

Key Differences between Cloud-Native and Traditional Applications

Architecture: The key difference is microservices versus monolithic architecture. Legacy apps are built as single, self-contained blocks, while cloud native apps are composed of loosely coupled services.
Scalability: Cloud native apps scale horizontally, and each service can be scaled independently according to demand. Legacy apps scale vertically, which means more powerful hardware and often complete system restarts.
Deployment: Legacy applications need full redeployments and maintenance windows. Cloud native applications support continuous, zero-downtime deployment through rolling and blue-green deployments.
Infrastructure: Legacy applications need predictable, static infrastructure. Cloud native applications work with dynamic, elastic infrastructure that scales automatically with workload needs.

Advantages of Cloud-Native Applications

The benefits of cloud native apps are operational, financial, and technical. To start with, elasticity lets an application scale out during a traffic surge, maintaining performance without provisioning for peak load in advance. This speaks directly to the cost comparison between cloud native and traditional apps, since companies only pay for the resources they use.

Increased resilience is another important benefit. When individual microservices fail, the others remain operational, reducing overall system downtime. A crash in a classic monolith, in contrast, brings down the entire application.

Development velocity also increases. Multiple microservices can be developed in parallel without teams blocking one another, so features and patches are delivered faster. Performance comparisons often favor cloud native apps, since they are optimized for resource use and built on a distributed processing architecture. In addition, cloud native designs support polyglot programming, so the most appropriate technology can be used for each service rather than a single stack for the whole application.

Limitations of Cloud-Native Approach

For all their benefits, cloud native applications introduce complexity. Distributed systems are more difficult to manage, which means more extensive monitoring, logging, and tracing. Containerization, orchestration, and microservices patterns require specialized knowledge within the organization. The initial investment in tooling, training, and infrastructure can be costly. Latency between services can hurt performance if the system is not properly designed. Security is harder to enforce because numerous service endpoints must be protected. Migrating legacy systems to cloud native requires a tremendous amount of work and in most cases calls for a complete architectural overhaul rather than a seamless migration.

Advantages of Traditional Applications

Legacy application design is simpler in certain circumstances. Small apps and steady workloads don't need the additional overhead of cloud native infrastructure. Development and debugging are normally easier in monolithic designs. For companies with long-standing on-premises investments, traditional approaches can be more cost-effective in the short term. Teams experienced in monolithic development need not learn new paradigms and can remain productive. For applications that need tight coupling or heavy communication between components, monolithic designs reduce network overhead.
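The network-overhead trade-off mentioned above can be made concrete with rough arithmetic: in a monolith, components call each other in-process, while a request that passes through several services pays a network cost on every hop. The sketch below is only an illustration under stated assumptions. The function name and all figures are hypothetical, hops are assumed to be sequential, and real latencies have to be measured.

```python
def request_latency_ms(compute_ms: float, network_hops: int, per_hop_ms: float) -> float:
    """Total latency for one request: compute time plus one network cost per sequential hop."""
    if network_hops < 0:
        raise ValueError("network_hops must be non-negative")
    return compute_ms + network_hops * per_hop_ms


if __name__ == "__main__":
    # Hypothetical figures for illustration only; real values must be measured.
    COMPUTE_MS = 20.0   # business logic time, assumed equal in both designs
    PER_HOP_MS = 2.0    # assumed cost of one service-to-service call

    monolith = request_latency_ms(COMPUTE_MS, network_hops=0, per_hop_ms=PER_HOP_MS)
    services = request_latency_ms(COMPUTE_MS, network_hops=5, per_hop_ms=PER_HOP_MS)
    print(f"monolith: {monolith:.1f} ms, five sequential service calls: {services:.1f} ms")
```

The model ignores parallel calls, caching, and retries, so it is best read as a reminder to count hops on the critical path when splitting a tightly coupled component into services.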
When to Choose Which Approach

The choice of architecture depends on the specific needs of the business. Use cloud native when you need to scale quickly, need high availability, deploy often, or expect rapid growth. Start-ups and digitally driven companies value the flexibility of cloud native the most.

Legacy approaches suit mature applications with relatively stable requirements, organizations with a small budget for infrastructure modernization, or very small teams without cloud native expertise. Simple workflows and predictable resource requirements may not justify cloud native complexity.

The Future: Transitioning from Traditional to Cloud-Native

For many businesses, moving to cloud native applications is about staying competitive. Businesses are adopting hybrid strategies, incrementally refactoring monoliths into microservices with the strangler fig pattern: new capabilities are built as microservices alongside the legacy core, which is left untouched at first and replaced piece by piece. Successful migrations entail a cultural shift to DevOps, investment in automation, and phased migration strategies. Containerizing applications first can yield some cloud native benefits without complete rewrites.

Conclusion

The gap between cloud native and legacy applications runs deeper than technical standards; it extends to the way a business operates. While cloud native development offers greater scalability, resiliency, and responsiveness, legacy practices remain well suited to certain usage patterns. Understanding both paradigms makes it possible to choose correctly based on organizational goals, resources, and technical aptitude. With the evolution of cloud technologies, the

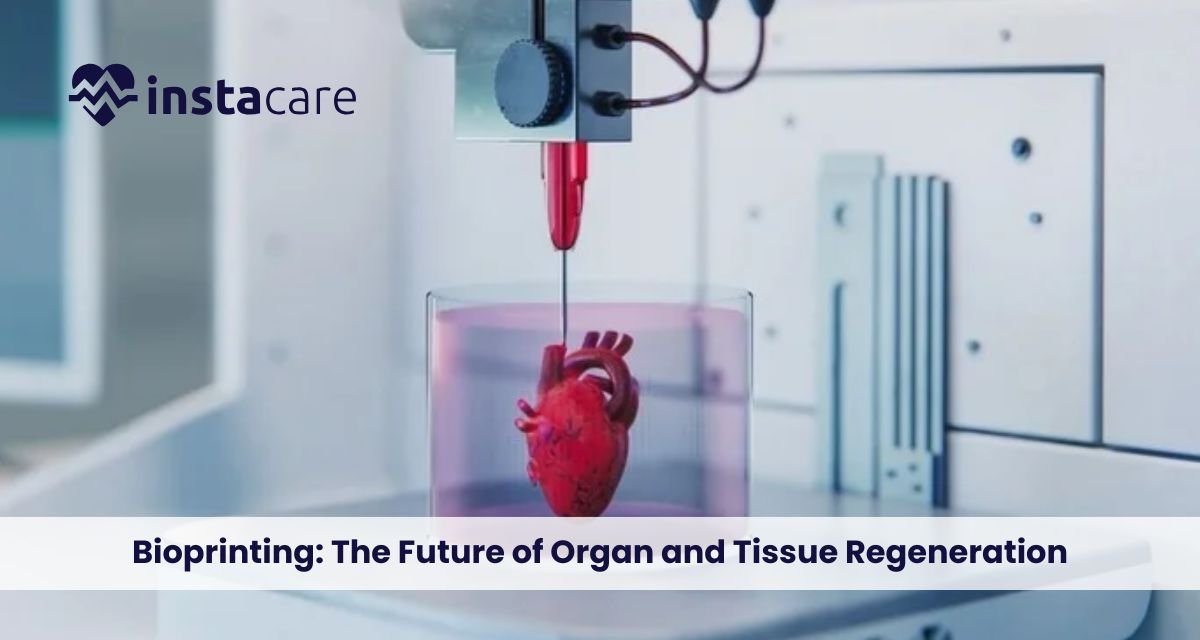

Bioprinting: The Future of Organ and Tissue Regeneration

Recent decades have seen major advances in regenerative medicine, most prominently in 3D bioprinting technology. The technology brings together biology, engineering, and advanced printing systems to design and manufacture living tissue and even organs. The vision of printing functional biological scaffolds to repair or replace failed tissue is moving from science fiction towards reality. As biomaterials science, printing technology, and stem cell technology advance, medical bioprinting could revolutionise how doctors treat patients with organ failure, disease, or traumatic injury.

What Is Bioprinting?

Bioprinting is a form of 3D printing that builds living structures from living cells and biomaterials. Conventional additive manufacturing prints objects from plastics, metals, or resins, whereas bioprinting works with biocompatible scaffolds, growth factors, and cells. In layman's terms, bioprinting organs and tissues means building living structures, layer by layer, that replicate natural biological systems.

The technology goes beyond anatomical models used in research: it is also employed in individualised medicine, where implants and tissue grafts are tailored to the specific needs of a patient. As more companies enter the field, more research is going into complex tissue types such as liver tissue, heart muscle, and skin grafts for severely burned patients.

How Bioprinting Works

To appreciate the technology's scope, it helps to know how bioprinting is done. It starts with a digital model, most often derived from medical imaging such as MRI or CT scans, which is then converted into a 3D blueprint for printing. The process has three stages:

Preprocessing: Acquisition of patient-specific data, creation of the digital model, and selection of biomaterials and cell types.
Printing: Layer-by-layer deposition of bio-ink containing cells, hydrogels, or growth factors.
Post-processing: Incubation of the printed structure in a bioreactor to promote cell growth, vascularization, and maturation.

These steps allow the resulting tissue not just to replicate anatomy but also to function biologically.

Materials Used in Bioprinting

The most important aspect of effective bioprinting is choosing the material. Bioprinting materials should be flexible, biocompatible, and supportive of cell growth. Commonly used materials include:

Bio-inks: Live cells suspended in hydrogels with proteins and growth factors.
Synthetic polymers: Polycaprolactone (PCL), used to form stiff scaffolds.
Natural polymers: Gelatin, alginate, and collagen, which mimic the body's extracellular matrix.
Decellularised matrices: Tissues from which the cells have been removed while the structural proteins remain.

Material choice depends on the final use: bone, cartilage, vascular networks, or whole-organ structures.

Applications of Bioprinting

The applications of bioprinting are many and continue to grow. Some of the major ones are:

Tissue Engineering: Building bioprinted tissue such as cartilage, skin, and bone for regenerative medicine.
Organ Transplantation: Working towards functional kidneys, livers, and hearts to help bridge the donor gap.
Drug Testing: Printing tissue to test the safety and effectiveness of drugs without the use of animals.
Cancer Research: Printing tissue for disease modelling and for testing new treatments.
Cosmetic and Reconstructive Surgery: Personalised skin grafts, ear cartilage, and bone structures.

Bioprinting could thereby shorten or even eliminate transplant waiting lists and accelerate patient recovery.

Benefits of Bioprinting

Bioprinting has many advantages over traditional medical procedures. The most significant are:

Personalization: Organs and tissue are printed to match a patient's anatomy and genetic requirements precisely.
Reduced Rejection Rate: Because the tissue is composed of the patient's own cells, rejection by the body is less likely.
Faster Healing Rate: Computer-designed implants and grafts integrate more readily into host tissue.
Ethical Benefits: Reliance on animal testing and on human donor organs is reduced.
Research Benefits: Scientists can study disease processes with the help of high-definition tissue models.

By easing severe donor organ shortages and treating millions of patients worldwide, bioprinting can change lives.

Challenges and Limitations

As promising as bioprinting is, several critical issues must be addressed before it stands any chance of becoming a standard medical procedure.

Vascularization: Printing tissue with networks of vessels that deliver oxygen and nutrients remains difficult.
Organ Structure Complexity: Simple tissue like skin is relatively straightforward to print, but a kidney or a heart is much more complicated.
Regulatory Obstacles: International guidance and regulation for clearing bioprinted products are still lacking.
Cost: Sophisticated materials, technology, and expertise are expensive.
Scalability: Lab-scale tissue can be produced, but large-scale mass production is not yet feasible.

Scientists continue to push at these limits, but it will be years before bioprinting becomes ordinary clinical therapy.

Ethical and Legal Concerns

The ability to produce living tissue also raises ethical issues. They include questions of human identity, fear of cloning, and abuse of the technology. For example, who holds the rights to a printed organ: the creator, the hospital, or the patient?

Equity of access is also at stake. Restricting access to affluent patients would widen health inequity. Governments and international agencies have to put policies and guidelines in place for equitable, safe, and ethical access to this technology.

The Future of Bioprinting

There is hope for the future of bioprinting. Scientists can already envision the day when transplant waiting lists become a footnote in books and personalised medicine is the norm. The coming decade may bring clinical trials for functional transplantable organs, routine use of printed tissue in drug discovery, and further advances in regenerative medicine. In addition, the distinctions between bioprinting and conventional 3D printing will become clearer as the industries overlap. Mechanical objects are

3D organ printing technology has been the most exciting advanced medicine development. There are millions of patients all over the world in need of donor organs, yet others never find a matching donor on time. Conventional transplants are hampered by donor shortage, immune rejection, and healing times. 3D bioprinting process offers another option where doctors print an organ using a patient’s cells, minimizing the risk of rejection and goodbye to donor reliance. No longer science fiction. From lab-grown tissues to working models of a 3D printed heart and 3D-printed liver, the potential is building fast. The ability to print organs on demand could transform the future of transplant medicine and regenerative therapy. In this article, we’ll explore how 3D printed organs work, their Benefits of 3D printed organs, current progress, limitations, ethical debates, and the expected future of this game-changing technology. What Are 3D-Printed Organs? 3D printed organs in medicine refer to bioengineered organs printed through printing technology to produce viable living cells. Differing from machine implants, the organs mimic the shape and function of real human organs. Scientists already have the bioprinting of minor structures like ear cartilage, blood vessels, and skin. Major organs are at the testing stage, but these are the future’s next giant leap for medicine. It is possible to translate it to bespoke organs tailored specifically to each patient. How 3D Bioprinting Works? In order to understand how the process of 3D bioprinting functions, one must understand how it is an imitation of nature. Regular 3D printing involves metals or plastic, but bioprinting involves a special form of “bio-ink,” something composed of cells and biomaterials. Let us explain the process step by step: Cell Harvesting – They are harvested from the patient, preferably stem cells, as they may be reprogrammed into another form of tissue. 
Bio-ink Appointment – They are mixed with biomaterials or hydrogels, in which they can nourish and develop themselves. Printing – Bio-ink is loaded into a 3D bioprinter, and it prints the material layer by layer, using a computer model of the organ. Maturation – Cultured printed organ is cultured in a bioreactor, where it develops and functional tissue. This bioprinting method of medicine enables scientists to construct organ models with a level of accuracy unparalleled in history, replicating the true tissue structure. Even the blood vessel networks and heart valves have been printed by other scientists, instrumental components for the completion of whole-scale organs. Advantages of Organs 3D Printed The advantages of 3D-printed organs far outweigh the problem of the shortage of donors. Some of the most notable advantages include: Smaller Waiting Lists: No more waiting years for patients to get transplants. Organs would instead be printed. Less Immune Rejection: Because the organs are printed from their own cells, there is less chance of immune rejection. Quicker Processes: In-body organs reduce waiting times leading up to the surgery. It is these advantages that render organ printing the future’s regenerative and customized medicine, or at least so say most experts. Future Applications and Success Stories A completely 3D printed organ transplant into the human body remains a possibility, but we do have some pretty neat stuff that indicates how close we’re getting: Skin Printing: Bioprinters now print burn victims’ skin grafts for reconstructive surgery. Cartilage and Bone Printing: For orthopedic surgery, joint reconstruction, and dental implantation. Vascular Structures: Scientists already print blood vessels, one of the largest of the organ development challenges. Heart Prototypes: A 3D-printed infant heart with cells and chambers has already been printed in laboratory tests. Liver Tissue Models: Bioprinting of liver tissue minimizes reliance on animal testing. 
Such a feat indicates that although whole organ transplant is years ahead, the technology of 3D printed organs for human life is increasingly being developed. Book Free Demo Limitations and Challenges There are still some challenges in 3D organ printing that are yet to be overcome with all the sudden progress: Organs’ Complexity: Organs such as kidneys and hearts possess complex networks of blood vessels, and hence they are more difficult to recreate. Longevity and Viability: The tissue needs to survive and operate for months, and maybe even years, after implantation into patients. Scale: At least for the time being, it is still beyond our means to bioprint huge, working organs on a regular basis. Cost: Bioprinting is done on costly machines, material, and professionals. Regulations: New healthcare technology undergoes thorough approval procedures before they are practiced on a large scale. These are the sorts of problems that mean organ bioprinting as brilliant as it is will be a couple of years of R&D before it becomes business as normal in hospitals. The Future of 3D Printed Organs The Future of organ bioprinting looks very bright. Within the next 10–20 years, experts say, implantable kidneys, hearts, and lungs will be manufactured through Bioprinting in healthcare. It can eventually end organ shortages and even become a donor-based transplantation option. Those future research will be hybrid in the sense that the native and synthetic tissue are merged into a composite. That is where 3D printed tissue engineering enters the scene, where not only are the destroyed organs replaced or fixed but the whole transplant is avoided. The second is tailored medicine organ printing with the exact dimensions to suit a specific patient’s biology and anatomy. That can reduce results and recovery time and improve transplants, and make them more secure. Ethical and Social Considerations Scientific progress has significant ethical issues. 
Availability: Will 3D printed synthetic organs be available to all patients, or only to the affluent?
Regulation: Who will oversee the safety and certification of 3D printed artificial organs?
Impact on Donation Programs: If organs can be printed, what role will donation programs still play?
Patents and Ownership: Can firms patent human tissue?
Public acceptance will also determine how quickly 3D printed organs become part of routine medicine. A relationship of

Brain-Computer Interfaces: The Future Human-Technology Interface
Imagine a future in which controlling computers, prosthetics, or even vehicles with thought alone is no longer fiction. That future is no longer confined to science fiction; it is becoming reality through Brain-Computer Interfaces (BCIs). As the intermediary between the human mind and electronics, BCIs are transforming human-machine interaction.
From assisting paralyzed patients to restoring senses lost to disease or injury, the technology could change how we work, live, and interact. BCIs also bring disadvantages along with their advantages, relating mainly to ethics, privacy, and security.
This article describes what BCIs are, how they function, their types, uses, pros and cons, and the future of brain-computer interfaces.
What Is a Brain-Computer Interface?
A brain-computer interface (BCI), also called a brain-machine interface (BMI) or neural interface, is a system that links the brain to an external device. It allows information to pass from the human brain to a computer without any physical movement.
That is, BCIs map the electrical signals created by neural activity onto computer instructions that operate external devices. Those devices could be robot arms, wheelchairs, communication programs, or virtual worlds.
The main purpose of BCI technology is to restore or supplement human function. BCIs, for example, enable patients with spinal cord injuries to operate devices or to communicate even under severe physical impairment.
How Brain-Computer Interfaces Work
Understanding how brain-computer interfaces work starts with how the brain sends messages. The brain produces electrical impulses as neurons fire. BCIs detect these impulses and translate them into commands.
The process has four general stages:
Signal Acquisition: Sensors detect brain activity using electrodes placed on the scalp (non-invasive) or inside the brain (invasive). The sensors pick up electrical activity as we move or think.
Signal Processing: The raw data is typically noisy. Spurious signals are filtered out, and the features that are meaningful for a particular thought or movement are extracted and passed to the system.
Translation Algorithms: Machine learning algorithms translate the patterns into machine commands. For instance, the thought of moving a hand can be translated into movement of a robotic arm.
Output and Feedback: The decoded signal drives an external computer or device. Visual or sensory feedback lets users adjust their thinking and improve control accuracy over time.
Everything happens in real time, normally on the order of milliseconds, so users can control equipment almost as naturally as they move their own limbs.
Types of Brain-Computer Interfaces
There are three general types of brain-computer interfaces, differentiated by how signals are accessed:
Invasive BCIs: Electrodes are implanted in brain tissue. They yield the strongest, highest-quality signals but require surgery. They are used primarily for paralysis and in advanced medical BCI research.
Partially Invasive BCIs: Electrodes are placed on the brain’s surface (below the skull but not inside the tissue). They offer a balance between precision and safety and are used to monitor seizures or complex motor activity.
Non-Invasive BCIs: These are the most common and safest type, employing EEG caps or scalp-mounted sensors. They suit consumer markets, gaming, and research, but signal quality suffers because of interference from the skin and skull.
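To make the four-stage pipeline described above more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the signal is simulated rather than recorded, the 250 Hz sampling rate and 10 Hz rhythm are arbitrary choices, and a fixed threshold stands in for the machine learning step. The function names are hypothetical and do not come from any real BCI toolkit.

```python
import math
import random

SAMPLE_RATE_HZ = 250  # assumed sampling rate for this sketch


def acquire_signal(intent_active, n_samples=500, seed=0):
    """Stage 1 (simulated): one noisy channel of brain activity.

    When the user "intends" to move, a 10 Hz rhythm is added to the noise.
    """
    rng = random.Random(seed)
    amplitude = 2.0 if intent_active else 0.0
    return [
        amplitude * math.sin(2 * math.pi * 10 * t / SAMPLE_RATE_HZ)
        + rng.gauss(0, 0.5)
        for t in range(n_samples)
    ]


def band_power(signal, freq_hz):
    """Stage 2: extract one feature, the signal power at a single frequency.

    Correlating with a cosine and a sine at freq_hz is a single-bin DFT,
    which suppresses noise at other frequencies.
    """
    n = len(signal)
    step = 2 * math.pi * freq_hz / SAMPLE_RATE_HZ
    re = sum(x * math.cos(step * t) for t, x in enumerate(signal))
    im = sum(x * math.sin(step * t) for t, x in enumerate(signal))
    return (re * re + im * im) / (n * n)


def translate(power, threshold=0.25):
    """Stage 3: map the feature to a command (a threshold, not real ML)."""
    return "MOVE_ARM" if power > threshold else "REST"


def run_pipeline(intent_active):
    """Stage 4: produce the command that would drive an external device."""
    signal = acquire_signal(intent_active)
    return translate(band_power(signal, 10.0))


print(run_pipeline(True))   # MOVE_ARM
print(run_pipeline(False))  # REST
```

A real system would replace the threshold with a classifier trained on each user’s recordings, and would feed the result back to the user so that control improves over time; the feedback loop is not modeled here.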
Applications of Brain-Computer Interfaces
Brain-computer interface applications span medicine, industry, and entertainment. The most promising are:
Medical Rehabilitation: BCIs give patients partial mobility or a means of communication by letting them control a computer cursor or speech output. Patients with spinal cord injuries can direct robotic arms or wheelchairs using their brains alone.
Neuroprosthetics: Prosthetic limbs driven by neural signals let amputees control them more naturally, with greater independence and mobility.
Virtual Reality and Gaming: Non-invasive BCIs enable games in which players control characters with their thoughts, creating fully immersive experiences.
Mental Health and Neurotherapy: BCIs are being used to treat conditions such as depression, anxiety, and epilepsy by monitoring and stimulating parts of the brain.
Military and Research: Defense and research organizations are developing BCIs to enable communication in dangerous zones and to improve attention or cognition.
Brain-Computer Interface for Paralysis: One of the most striking uses is enabling paralyzed individuals to write, command robot arms, or even walk using exoskeletons, all through direct neural control.
Brain-computer interface companies such as Neuralink, Synchron, and Kernel are leading this innovation, creating tools that draw on neuroscience, artificial intelligence, and engineering to push the limits of what human beings can accomplish.
Advantages of Brain-Computer Interfaces
The growing demand for BCIs is due to their considerable advantages. The greatest are:
Restores Mobility and Independence: Gives paralyzed patients control of prosthetic or communication systems.
Improves Quality of Life: Enables people with disabilities to interact more effectively with the digital world.
Enhances Human-Machine Interaction: Makes it possible to control machines without physically touching them.
Supports Medical Research: Allows researchers to learn more about brain activity and disease.
Improves Performance: BCIs may ultimately improve memory, attention, or reaction time, producing so-called “neuro-enhanced humans.”
Challenges and Ethical Issues
Although the potential is great, BCIs also raise serious ethical issues that must be resolved before they become mainstream.
Data Privacy and Security: Brain data is about as personal as data can get. Unauthorized access to or misuse of neural data can compromise mental privacy.
Informed Consent: Users must fully understand the risks, especially with invasive technology that involves brain surgery.
Accessibility and Affordability: Existing BCI systems are expensive and advanced, excluding access to

Technology in Mental Health: Transforming Care Through Innovation and Connectivity
In our technology-saturated era, cases of stress, anxiety, and depression are rising worldwide, and technology has become a valuable asset in managing mental illness. It is now opening new avenues that make support more convenient, tailored, and effective. The use of technology in mental health has moved beyond awareness campaigns to advanced devices, apps, and sites that provide access to therapists, emotion tracking, and instant support.
From AI-assisted therapy to online mental care services, technological advances are changing the way people receive help, the way practitioners work, and the way the world views mental illness. The access and connectivity these technologies provide are removing old barriers and allowing millions of people to receive help from home.
Role of Technology in Mental Health
Technology contributes to mental health in three ways: accessibility, personalization, and prevention. Treatment delivered through digital media is available regardless of where people live or what their economic status is. Technology-based access to care also allows early action and continuous follow-up, which are essential in dealing with chronic psychological disorders.
Technology enables individuals to monitor symptoms and track behavior with smart devices, and patients can take part in therapy sessions or self-help exercises without stigma.
Digital Mental Health Platforms and Apps
Among the most significant innovations is the development of apps and websites offering real-time mental health care and self-help information. These mental health applications include mood charts, guided meditation, CBT programs, and peer support chat groups.
These online mental health sites have widened access to therapy by making regular counseling more affordable. Examples include well-known apps such as Headspace, Calm, and BetterHelp, which have millions of subscribers globally. They enable individuals to track progress, set goals, and monitor symptoms through direct digital interventions.
The advantage of technology in managing mental illness lies in the device itself: it is portable and easy to use. People who never found the courage to visit a therapist, whether for fear of social stigma or because of where they live, can now obtain help privately and at their own pace.
Teletherapy and Online Counseling
The COVID-19 pandemic brought teletherapy and online counseling to the forefront, and online sessions are now an everyday mode of therapy. With secure video calls and chat interfaces, professional therapists can deliver therapy without interruption, even during a lockdown or an emergency.
This benefits both patients and therapists. Patients can attend without having to travel, and therapists can work with additional clients. Teletherapy also encourages honesty because patients feel more at ease opening up in familiar surroundings.
The efficacy of online therapy and teletherapy has been supported by numerous research studies, including for individual therapy treating anxiety, depression, and trauma.
Artificial Intelligence in Mental Health
Applying artificial intelligence to mental health care is a major step forward. AI programs analyze patterns in speech, facial expression, and written communication to infer emotional state and signs of potential mental illness.
For example, chatbots like Woebot or Wysa use natural language processing to offer users empathetic support, help them practice coping behaviors, and assist with crisis intervention.
Mental health chatbots are available 24/7, with the aim of giving individuals in crisis continuous access to care.
Artificial intelligence also helps physicians forecast patient outcomes, tailor therapy plans, and identify high-risk patients who need priority treatment. Over time, the technology will likely advance to the point where AI becomes an enabling tool for therapists, making diagnosis more precise and therapy more personalized.
Wearable Technology and Mental Well-being
Another promising area is wearable technology for mental health, such as biosensors and smartwatches that monitor heart rate, sleep, and stress levels. These let patients and professionals track emotional and physiological reactions on a daily basis.
Beyond remote monitoring, wearables support prevention and early intervention, enabling people to care for their emotional well-being proactively instead of reactively.
Virtual Reality (VR) and Augmented Reality (AR) Therapies
Virtual reality is changing exposure therapy, mindfulness training, and relaxation techniques. VR immerses patients in a virtual world where they can face their fears in a safe environment, learn how to manage them, or be guided through meditation.
For example, PTSD patients can be exposed to traumatic experiences in controlled virtual reality, where therapists can guide them through re-learning and reinterpreting their responses. Likewise, patients with social anxiety can rehearse social skills in a simulated environment and build confidence before real-world exposure.
Big Data and Mental Health Research
Big data is changing how researchers understand patterns of emotional well-being.
By analyzing data gathered from online treatment, wearable sensors, and social media interaction, researchers have been able to examine large-scale behavior patterns.
This data can be used to recognize early warnings of mental health crises, monitor populations, and optimize treatment. Big data findings have the potential to transform public health policy, but they also raise serious data privacy concerns in digital mental health.
The Future of Technology in Mental Health
The future of technology in mental healthcare is bright, with innovation making care more personalized and affordable. Using AI, VR, and wearables in daily life could make it possible to detect and treat mental illness years earlier, while the condition is still in its early stages.
Therapists can carry out predictive analysis on behavioral data and use it to plan early intervention. Intervention can

Digital Twins in Healthcare: Revolutionizing Patient Care Through Virtual Replicas
Healthcare stands at the threshold of a revolution in which virtual replicas of patients, organs, and hospitals are redefining medicine. Digital twin technology, originally developed for manufacturing and aerospace, is now changing how clinicians diagnose, plan treatment, and predict patient outcomes. With dynamic, data-driven virtual models, digital twins enable a degree of precision never before attainable in patient care and medical research.
What Are Digital Twins in Healthcare?
Healthcare digital twins are virtual copies of physical entities (patients, organs, medical equipment, or entire healthcare facilities) that are updated with real-time data. Unlike static health simulations, these dynamic copies evolve alongside their physical counterparts, drawing on inputs from medical images, wearables, electronic health records, and biosensors.
A patient digital twin can integrate genetic information, behavior, medical history, and real-time physiological data into a complete virtual representation that can simulate treatment scenarios and predict disease development with no risk to the actual patient.
How Do Digital Twins Work in the Healthcare Ecosystem?
Digital twins work by combining advanced technologies and data sources:
Data Integration and Aggregation: Construction begins with aggregating data from wearable biosensors, electronic health records, lab results, medical imaging, and genomic sequencing. Healthcare IoT and digital twins work hand in hand: connected devices stream real-time physiological information to the virtual model.
Model Building and Renewal: Advanced algorithms convert this information into high-fidelity virtual copies.
Unlike conventional models, digital twins stay synchronized with their physical counterparts, because continuous data sharing keeps them current as conditions change.
Simulation and Analysis: Healthcare simulation models make it possible to simulate intricate scenarios. Doctors can trial different treatment plans and predict outcomes before applying them to actual patients, refining the plan iteratively.
Applications of Digital Twins in Healthcare
Applications of digital twins in healthcare touch almost every aspect of modern medicine:
Personalized Medicine: Digital twins create individualized copies of patients so that physicians can design treatment around each patient’s unique genetic and physiological makeup.
Surgery Planning: Surgeons use digital twins to practice complex operations on virtual replicas. Cardiovascular surgeons can try different approaches on a patient’s digital twin heart by simulating the procedure, reducing surgical risk.
Patient Monitoring: Digital twins enable real-time tracking of patients’ health and early detection of illness by analyzing changes in physiological patterns.
Medical Device Development: Digital twins accelerate device development by enabling virtual testing across different patient populations at lower cost and with greater safety.
Drug Discovery: Digital twins accelerate drug development by making it possible to run virtual trials on virtual patients.
Hospital Operations: Digital twin hospitals improve resource utilization, patient flow, and operational efficiency by simulating multiple scenarios.
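The loop described above (integrate data, update the model, then simulate) can be illustrated with a deliberately tiny Python sketch. It is an illustration only, under loud assumptions: the `PatientTwin` class, its single resting-heart-rate state, the smoothing weight, and the linear what-if projection are all hypothetical and have no clinical validity. Real digital twins combine many data sources with far more sophisticated models.

```python
from dataclasses import dataclass, field


@dataclass
class PatientTwin:
    """Hypothetical, highly simplified twin tracking resting heart rate."""

    resting_hr: float               # current model estimate, beats per minute
    smoothing: float = 0.2          # assumed weight given to each new reading
    history: list = field(default_factory=list)

    def ingest(self, measured_hr: float) -> None:
        """Data integration and model update: blend in a new wearable reading."""
        self.history.append(measured_hr)
        self.resting_hr += self.smoothing * (measured_hr - self.resting_hr)

    def simulate(self, hr_change_per_week: float, weeks: int) -> float:
        """Simulation: project a what-if scenario without changing the twin.

        The linear trend is a made-up assumption, not a physiological model.
        """
        return self.resting_hr + hr_change_per_week * weeks


twin = PatientTwin(resting_hr=80.0)
for reading in [78.0, 76.0, 74.0]:   # simulated wearable readings
    twin.ingest(reading)

print(round(twin.resting_hr, 3))           # 77.904
print(round(twin.simulate(-0.5, 4), 3))    # 75.904
```

The point of the design is the separation of concerns: `ingest` keeps the virtual copy current as data arrives, while `simulate` explores a scenario without touching the stored state, which mirrors the risk-free experimentation that digital twins are meant to offer.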
Benefits of Digital Twins in Medicine
The benefits of digital twins in healthcare are far-reaching:
Increased Accuracy: Digital twins allow treatments to be personalized for specific patients, improving outcomes and reducing side effects.
Risk-Free Trials: Doctors can try out different treatment options virtually without endangering patients.
Early Diagnosis: Ongoing monitoring detects health conditions early, when they can be treated most effectively.
Improved Surgical Outcomes: Surgeons who rehearse on patient-specific digital twins operate with fewer complications and better results.
Accelerated Research: Virtual trials reduce the time and expense of medical research and improve safety.
Improved Operations: Hospital digital twins improve efficiency, reduce wait times, and maximize use of resources, reducing healthcare costs and boosting patient satisfaction.
Challenges and Limitations
As promising as digital twin technology is, it faces many challenges in healthcare:
Data Quality and Integration: Digital twins require large volumes of high-quality data, yet most medical systems are plagued by data fragmentation and interoperability issues.
Privacy and Security: Digital twins gather personal health data, making them attractive targets for cyberattacks.
Computational Complexity: Sophisticated digital twins demand immense computational capacity that is out of reach for most organizations.
Cost and Infrastructure: Deployment carries high costs for hardware, software, and trained staff.
Future of Digital Twins in Healthcare
The future of digital twins in healthcare promises disruptive innovation:
Whole-Body Digital Twins: Future designs will combine multiple organ-specific twins into composite models to enable whole-body health management.
AI-Powered Monitoring: Combining digital twins with AI will enable them to monitor patients autonomously and predict health events.
Precision Prevention: Digital twins will enable preventive treatment by detecting risk factors decades before problems arise.
Real-Life Applications: Real-world examples of digital twins in healthcare include the Living Heart Project, which is used in cardiovascular disease research, and digital twin hospitals by Siemens Healthineers. The European Virtual Human Twin project aims to create holistic digital twins for personalized medicine.
Conclusion
Digital twins represent a paradigm shift from population-based, reactive medicine to proactive, individualized healthcare. While there are difficult problems in data integration, privacy, validation, and expense, their potential value makes digital twins one of technology’s greatest promises to healthcare. As computing power rises and artificial intelligence continues to improve, digital twins will become part of mainstream medicine, transforming disease prevention, diagnosis, and treatment.
FAQs
How well do digital twins predict patient outcomes?
Accuracy varies with data quality and model sophistication, but advanced digital twins can predict outcomes with 80-90% accuracy, improving incrementally as each data set is added.
Are hospitals already using digital twins?
Yes, many of the world’s top medical centers already use digital twins to plan surgery, manage chronic disease, and optimize operations, and wider deployment is approaching.
Will digital twins replace doctors?
No, digital twins are decision-assistance devices that complement but do not

Contact Center Automation in Healthcare: Enhancing Patient Experience and Operational Efficiency
The healthcare industry is undergoing an unprecedented transformation with the emergence of digital technology and patient-centric care models. In this setting, contact center automation in healthcare has emerged as a strong way to streamline patient communication, reduce operational inefficiencies, and improve the overall experience. Hospitals and clinics are investing more in online platforms and automated technologies to meet the growing demand for faster, higher-quality medical services. This strategy not only raises patient satisfaction but also enables health professionals to use resources optimally.
What is Contact Center Automation?
Contact center automation refers to the application of artificial intelligence (AI), machine learning, and digital technology to handle and automate communication between patients and healthcare professionals. Traditional call centers suffer from long hold times, high call volumes, and human error. Automation addresses these problems with systems such as AI chatbots, automated voice response, and integrated platforms that carry out tasks like appointment setting, prescription refills, and answering FAQs.
Why Does Healthcare Need Contact Center Automation?
Healthcare professionals work in a context where time and precision are of the essence. Delayed patient communication and poor handling of questions affect health outcomes and erode trust in healthcare systems. Healthcare call center automation is needed so that hospitals and clinics can handle high call volumes without sacrificing quality of service. Further, automated support is available 24/7, which is especially important for patients who need immediate guidance.
The second major reason is increasing pressure for personalized care.
Patients expect smooth interactions, whether they are booking appointments, obtaining medical information, or following up. AI in healthcare contact centers provides instant responses and appropriate information to every patient without overburdening healthcare professionals.
Applications of Contact Center Automation in the Healthcare Industry
● Appointment Scheduling: One of the most successful implementations is automated appointment scheduling. Patients can schedule, reschedule, or cancel an appointment through voice assistants, chatbots, or web portals. This reduces reliance on staff while adding convenience for patients.
● Patient Communication: Automated patient communication delivers timely reminders for vaccine schedules, lab test results, follow-up visits, or medication. Automated reminders reduce no-shows and improve treatment adherence.
● Billing and Insurance Queries: Automation can give real-time answers on billing, insurance, and payment schedules without administrative hassle and confusion.
● Multi-Channel Communication: Healthcare contact centers today use omnichannel automation to communicate with patients via call, SMS, email, mobile app, and web chat. A common platform provides the same experience on every channel.
● Emergency Triage Support: Automation software can also sort and prioritize patient questions immediately, flagging urgent ones for medical staff to address promptly.
● Workflow Management: Contact center workflow automation takes over tasks like updating patient records, handling insurance information, and routing calls to the right department.
Benefits of Contact Center Automation
● Lower Waiting Times: Among the major benefits of contact center automation in healthcare is shorter wait times.
Patients no longer have to wait for long on the telephone; automated software gives them an answer immediately or connects them to the appropriate person.
● Operational Efficiency: Contact center automation and healthcare efficiency go hand in hand because automation reduces human error, cuts repetitive manual tasks, and improves employee productivity.
● Improved Patient Experience: Real-time feedback and one-to-one conversations greatly improve the patient experience. Patients appreciate being treated with dignity and respect throughout their medical journey.
● Cost Savings: Automation reduces the need for large call center teams, lowering operating costs while maintaining high-quality service.
● Better Resource Utilization: With repetitive work handled by automation, healthcare professionals are free to deal with more urgent matters such as direct patient care.
● Data-Driven Insights: Reports and analytics from automated systems help organizations understand patient needs, recognize bottlenecks, and improve services.
● Scalability: Hospitals can scale contact center automation software as demand grows, serving more patient inquiries without enormous infrastructure investments.
Challenges & Considerations
While the advantages of contact center automation for the healthcare sector are wide-reaching, there are challenges:
Data Security and Privacy: Keeping sensitive patient data secure is a top priority when implementing automation.
Interfacing with Other Systems: Many hospitals already run Electronic Health Record (EHR) systems, so integrating new solutions with them can be challenging.
Human Contact vs. Automation: Some patients prefer a human touch, particularly for sensitive medical matters.
Implementation Cost: Training and installation take money and time, which can be a hurdle for smaller health organizations.
Regulatory Compliance: Automation software needs to comply with healthcare regulations like HIPAA or GDPR to safeguard patient confidentiality and safety.
Future of Contact Center Automation in Healthcare
The future of contact center automation in healthcare looks promising, with artificial intelligence and machine learning playing an ever larger role. Predictive analytics will let providers anticipate patient needs, while voice recognition software will enable more natural, human-like communication. Telemedicine platforms will be coupled with automation, creating seamless digital healthcare ecosystems.
Emerging technologies will also bring more intelligent triage systems, sophisticated sentiment analysis, and preventive patient engagement programs. As a result, contact centers will become smart hubs where automation works alongside human judgment to deliver quality patient care.
Conclusion
Healthcare is a sector where smooth functioning and effective communication are given utmost priority. Automated healthcare call center solutions do more than automate patient interaction: they streamline workflows, lower costs, and raise patient satisfaction. Be it automating patient communication or automating health

High-Performance Computing in Healthcare: Transforming Medicine with Data-Driven Innovation
The healthcare sector is witnessing a computing revolution. From mapping the human genome to designing life-saving drugs in record time, high-performance computing (HPC) has been the unseen force behind many of the most significant developments in medicine today. With healthcare generating more data than ever before, the ability to analyze and process it has become the engine driving medical research and patient treatment.
What is High-Performance Computing (HPC)?
High-performance computing is the use of parallel processing and supercomputers to solve computationally intensive problems several orders of magnitude faster than standard computing hardware. A standard computer relies on one or a handful of processors, whereas an HPC system has thousands or even millions of processor cores running concurrently to carry out enormous calculations.
The supercomputers used in healthcare research operate at petaflop speeds (quadrillions of calculations per second). Modern HPC environments include on-premises supercomputing, cloud-based HPC solutions for healthcare, and hybrid models combining the two, offering computational power to institutions of every size.
“High-performance computing has become the backbone of modern medical research, enabling discoveries that were impossible just a decade ago.” – Healthcare Technology Expert
Applications of HPC in Healthcare
HPC in Genomics
The human genome consists of approximately 3 billion base pairs, and sequencing a single genome yields over 200 gigabytes of raw data. HPC in genomics enables scientists to compare a thousand genomes simultaneously and rapidly identify patterns in drug response variability and disease susceptibility.
High Performance Computing for Drug Discovery
Traditional pharmaceutical development is a billion-dollar, decade-long endeavor.
High performance computing for drug discovery enables hundreds of millions of potential drugs to be virtually screened against disease targets. Scientists used HPC to model the structure of the SARS-CoV-2 spike protein and screen for candidate therapeutic compounds, compressing years of effort into months.
HPC in Medical Imaging
HPC in medical imaging provides near-instant image reconstruction and sophisticated analysis that detects minor abnormalities beyond human vision. Machine learning software processes large numbers of medical images on HPC infrastructure to learn disease patterns, making diagnoses more precise with minimal interpretation time.
HPC for Disease Modeling
HPC for disease modeling makes it possible to simulate disease transmission across populations and to test intervention methods. Such models combine many variables to create realistic scenarios that can inform effective policy responses to public health emergencies.
HPC for Precision Medicine
HPC for precision medicine combines genomic information, electronic health records, environmental exposures, and treatment response to establish the ideal treatment for an individual patient. Personalized medicine requires huge amounts of data to be processed to identify biomarkers, predict treatment response, and match patients with appropriate clinical trials.
By analyzing patient information thoroughly, HPC systems help doctors select the best available therapy with the fewest side effects. The technology allows on-the-fly analysis of the genetic variations that influence drug metabolism, so doctors can adjust dosages and select drugs based on each patient’s unique profile. This is what makes precision medicine a clinical reality.
Benefits of HPC in Healthcare
The benefits of HPC in healthcare are transformative. Thanks to its computation speed, analyses that once took months can run in hours or a few days.
HPC systems can process tens of thousands more parameters than standard approaches, leading to more accurate prediction, diagnosis, and treatment. Being able to analyze whole datasets rather than samples reduces sampling bias and increases statistical power. While the infrastructure is expensive, HPC ultimately pays for itself in healthcare by accelerating drug development, streamlining diagnosis, and enabling preventive treatments. Virtual screening and simulation considerably reduce the need for expensive physical tests and clinical trials. HPC for medical research promotes global collaboration, with researchers able to share computational resources and data. Perhaps most significantly, HPC for big data in healthcare offers the only feasible answer to the huge datasets created by contemporary medicine: by some estimates, healthcare generates about 30% of the world’s data volume.

Challenges and Limitations

As vast as its potential is, the challenges of HPC in healthcare are considerable. Specialized supercomputing facilities require multimillion-dollar annual investment. Operating HPC platforms requires a blend of computational science and healthcare expertise, a combination as rare as it is necessary, which produces a talent shortage. Data privacy remains a concern, particularly the need for cloud solutions that comply with HIPAA and GDPR. Supercomputers consume enormous amounts of power and carry a correspondingly large carbon footprint. Medical data is often unstandardized and error-prone, and requires extensive cleaning before HPC analysis. Ethical issues such as algorithmic bias and the misuse of predictive data call for careful governance.

Future of HPC in Healthcare

The future of HPC in healthcare promises major breakthroughs. Combining HPC with quantum computing has the potential to revolutionize drug discovery by simulating molecular interactions with previously unattainable accuracy.
Similarly, combining HPC with AI in clinical settings will continue to accelerate advances in diagnosis and treatment planning. Edge computing can move compute resources closer to data sources, enabling real-time analysis at the point of care. Cloud HPC solutions will continue to give smaller organizations access to leading-edge research. Future HPC systems will enable “digital twins”, computational models of individual patients used to forecast treatment outcomes before a treatment is applied. Future systems will also consume less energy through green power and optimized architectures.

Conclusion

High-performance computing has evolved from a specialized research tool into a fundamental pillar of healthcare. HPC applications in healthcare are transforming medicine through faster drug development, tailored treatments, improved diagnosis, and disease simulation. With continued innovation and wider availability, HPC will become a critical part of healthcare provision. Further investment in HPC infrastructure will translate directly into better health outcomes, faster treatments, and, ultimately, lives saved.

FAQs

Q: In what way does HPC differ from healthcare cloud computing?
Cloud computing provides on-demand access to computing hardware, whereas HPC is specifically designed for large-scale parallel processing. Cloud HPC combines both.

Q: Can small